Last time, I gave a rough outline of a hybrid approach that uses both multidimensional scaling (MDS) and artificial neural networks (ANNs) to obtain the dimensions of a conceptual space [1]. Today, I will show our first results (which we will present next week at the AIC workshop in Palermo).

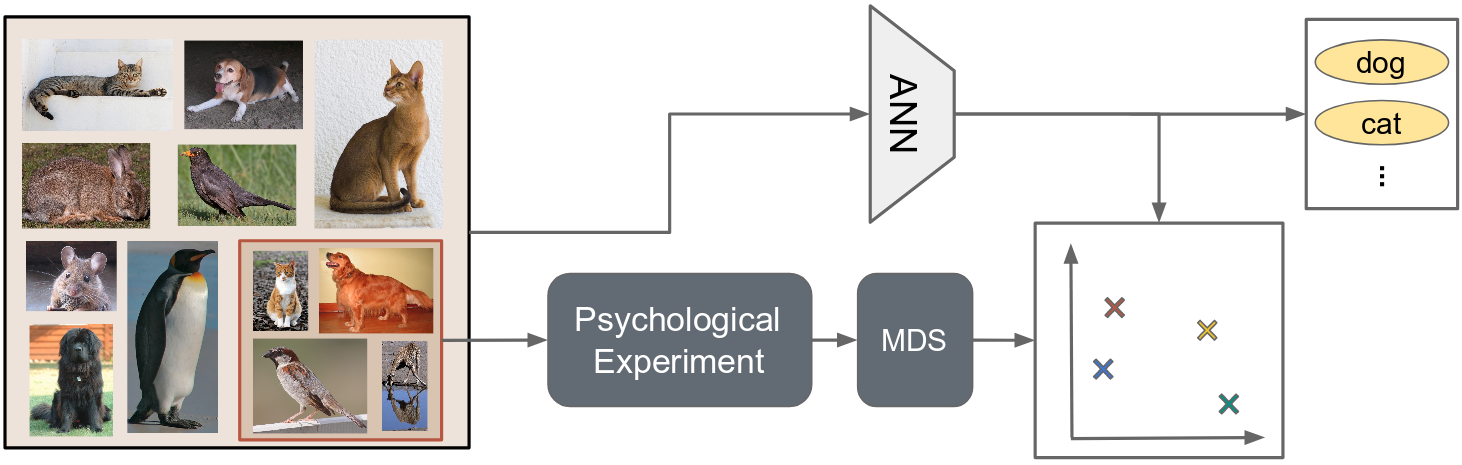

But first, as a quick recap, take a look at Figure 1, which illustrates the overall workflow of our hybrid approach: we obtain psychological similarity ratings for a subset of our overall data set, run MDS to get a conceptual space, and train an ANN to learn the mapping from image to MDS point. A secondary task such as classification is introduced in order to fight overfitting.

In order to check whether this proposed approach works, we used existing data sets and tools:

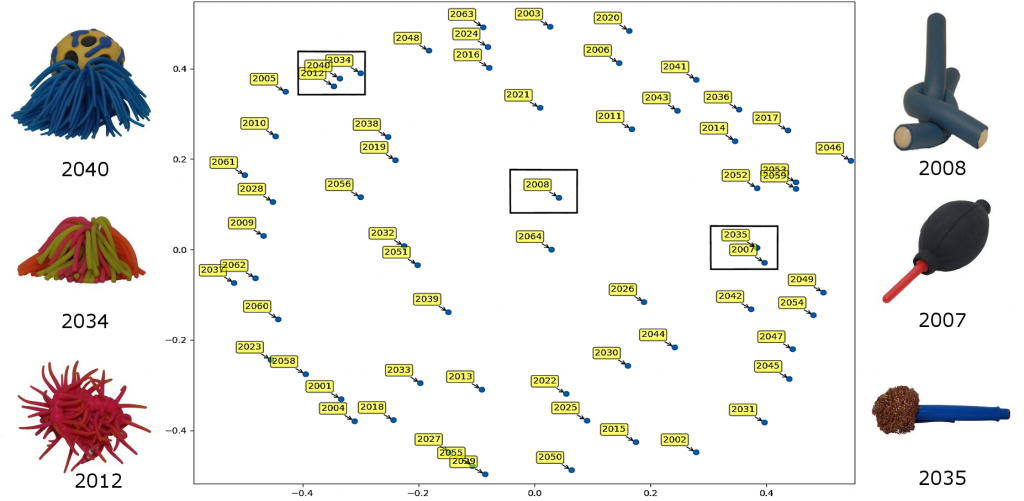

We decided to use the NOUN data set [2], which consists of 64 images of novel and unusual objects, along with pair-wise similarity ratings obtained in a psychological study. Figure 2 shows some example images and their positions in a two-dimensional conceptual space derived by MDS. We also investigated a four- and an eight-dimensional conceptual space.
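To make the MDS step a bit more concrete, here is a minimal sketch of how a dissimilarity matrix can be turned into point coordinates. It uses classical (Torgerson) MDS written in plain NumPy, which is only a stand-in: the exact MDS variant used for the NOUN spaces is not described in this post. The function name `classical_mds` and the toy dissimilarity matrix are assumptions made for illustration, not the actual NOUN ratings.

```python
import numpy as np


def classical_mds(dissimilarities, n_dims):
    """Embed items in n_dims dimensions from a symmetric dissimilarity matrix."""
    d = np.asarray(dissimilarities, dtype=float)
    n = d.shape[0]
    # Double-center the squared dissimilarities to obtain a Gram matrix.
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ (d ** 2) @ centering
    # eigh returns eigenvalues in ascending order; keep the n_dims largest.
    eigvals, eigvecs = np.linalg.eigh(gram)
    order = np.argsort(eigvals)[::-1][:n_dims]
    # Clip small negative eigenvalues, which occur for non-Euclidean input.
    scale = np.sqrt(np.clip(eigvals[order], 0.0, None))
    return eigvecs[:, order] * scale


# Toy stand-in for averaged human dissimilarity ratings of 64 items
# (hypothetical data generated from hidden 4D points, not the NOUN ratings).
rng = np.random.default_rng(0)
hidden = rng.normal(size=(64, 4))
dissim = np.linalg.norm(hidden[:, None, :] - hidden[None, :, :], axis=-1)

space_2d = classical_mds(dissim, 2)  # shape (64, 2)
space_4d = classical_mds(dissim, 4)  # shape (64, 4)
```

Each row of `space_2d` or `space_4d` is the position of one item in the resulting space. For real rating data, a nonmetric MDS variant may be more appropriate, because ratings are usually only meaningful up to their rank order.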

Training a deep neural network from scratch takes a lot of time and can be relatively complicated. In order to avoid this additional work, we used the Inception-v3 network [3], which had been pre-trained on the ImageNet data set. We removed the output layer of the network and replaced it with a simple linear regression. We kept all other weights of the network fixed and only trained the linear regression to map onto the points in the conceptual space.
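The idea of a linear regression on top of a frozen network can be sketched as follows. This is not our actual training code: the feature matrix is filled with random numbers in place of real network activations, the 2048-dimensional feature size and the 48/16 train/test split are assumptions, and the regression is solved in closed form with `np.linalg.lstsq` rather than trained iteratively. The helper names `fit_linear` and `predict` are made up for this example.

```python
import numpy as np

rng = np.random.default_rng(0)
n_images, n_features, n_dims = 64, 2048, 4

# Hypothetical stand-ins: `features` would be the activations of the frozen
# pre-trained network for each image, `targets` the MDS coordinates.
features = rng.normal(size=(n_images, n_features))
targets = rng.normal(size=(n_images, n_dims))

# Assumed split for illustration: 48 training images, 16 test images.
train_idx, test_idx = np.arange(48), np.arange(48, 64)


def add_bias(x):
    """Append a constant column so the regression can learn an offset."""
    return np.hstack([x, np.ones((x.shape[0], 1))])


def fit_linear(x, y):
    """Least-squares fit of y ~ [x, 1] @ weights (minimum-norm solution)."""
    weights, *_ = np.linalg.lstsq(add_bias(x), y, rcond=None)
    return weights


def predict(weights, x):
    return add_bias(x) @ weights


# Only the regression weights are fitted; the features stay fixed.
weights = fit_linear(features[train_idx], targets[train_idx])
train_pred = predict(weights, features[train_idx])
test_pred = predict(weights, features[test_idx])
```

In this toy setup there are far more free parameters (2049 per output dimension) than training images, so the regression can reproduce the training targets exactly while saying little about unseen images.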

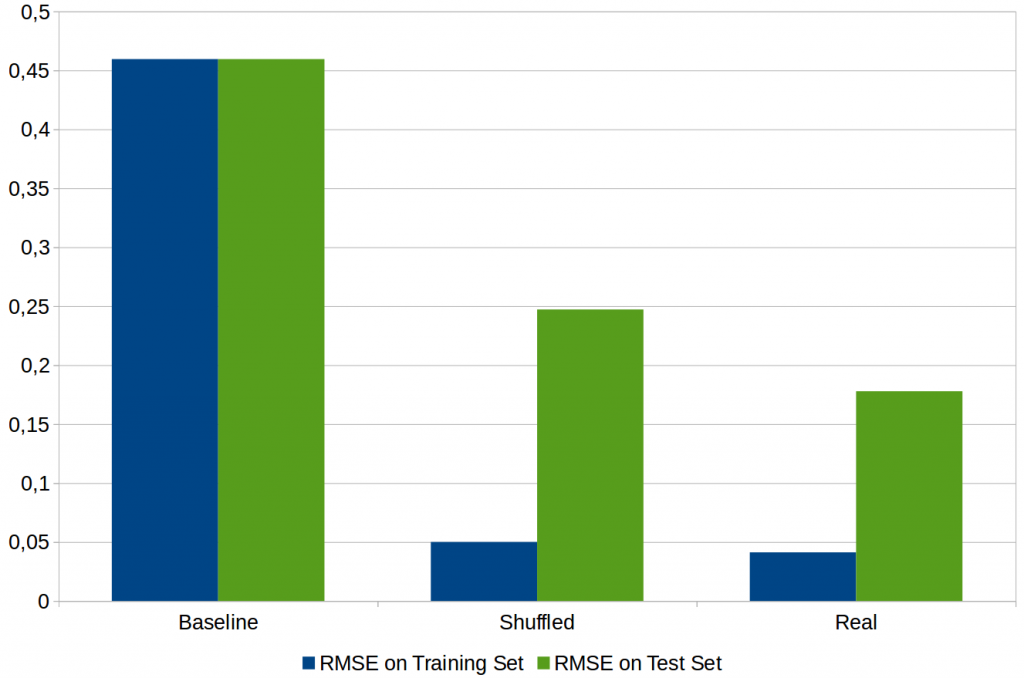

Figure 3 shows the results of our study for a four-dimensional conceptual space. The best baseline we found always predicts the origin (i.e., the point with the coordinates (0, 0, … , 0)) and has a fairly high RMSE (i.e., it performs quite poorly). For our regression, we analyzed two variants: one learned the correct mapping to the MDS space (“Real”), and the other learned a modified mapping in which the assignment of images to points had been shuffled (“Shuffled”). The results show that learning a mapping to the real conceptual space seems to be easier than learning a mapping to an arbitrary space. This indicates that the similarity structure of the conceptual space is useful in learning processes. Unfortunately, we also have to note the relatively large difference between the performance on the training set and on the test set, which is an indication that the linear regression overfits the data (i.e., it almost perfectly memorizes the mappings seen during the training phase, but fails to make good guesses for unseen data points).
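For readers who want to see how the baseline and the shuffled variant can be set up, here is a small sketch. It assumes that the RMSE is averaged over all coordinates of all points (the paper may aggregate differently), and it uses random numbers in place of the real MDS coordinates. The names `rmse`, `zero_pred`, and `shuffled_targets` are chosen for this example only.

```python
import numpy as np


def rmse(predictions, targets):
    """Root mean squared error, averaged over all points and coordinates."""
    diff = np.asarray(predictions) - np.asarray(targets)
    return float(np.sqrt(np.mean(diff ** 2)))


rng = np.random.default_rng(0)
# Hypothetical stand-in for the 4D MDS coordinates of the 64 images.
targets = rng.normal(size=(64, 4))

# Baseline: always predict the origin, regardless of the input image.
zero_pred = np.zeros_like(targets)
baseline_rmse = rmse(zero_pred, targets)

# "Shuffled" variant: keep the same set of points, but permute which image
# is assigned to which point, so the similarity structure is destroyed.
permutation = rng.permutation(len(targets))
shuffled_targets = targets[permutation]
```

A regression fitted against `shuffled_targets` sees exactly the same points as one fitted against `targets`, so any difference in error between the two comes from the image-to-point assignment rather than from the distribution of the points themselves.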

Reducing the amount of overfitting will be one of the focus topics in continuing this line of research. Nevertheless, we think that our first results are promising and that this hybrid approach is worth investigating further.

References

[1] Bechberger, L. & Kypridemou, E.: “Mapping Images to Psychological Similarity Spaces Using Neural Networks” AIC 2018.

[2] Horst, J. S. & Hout, M. C.: “The Novel Object and Unusual Name (NOUN) Database: A Collection of Novel Images for Use in Experimental Research” Behavior Research Methods, 2016, 48, 1393-1409.

[3] Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J. & Wojna, Z.: “Rethinking the Inception Architecture for Computer Vision” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, 2818-2826.