In one of my previous posts, I showed a little overview diagram of my PhD research. One component of this diagram was called “language games”, and so far I have not explained what that means. Well, today I’m going to give a short introduction to this topic.

Language games [1] focus on the question “How can language come into existence?”, i.e., “What are possible mechanisms that allow different individuals to come up with a shared vocabulary that they can use to communicate about things in the world?” I admit that this sounds a bit abstract, so let me illustrate the problem with an example:

Imagine that two children (let’s call them Alice and Bob) are sitting at a table drawing pictures with crayons. Alice wants to use the blue crayon next, but unfortunately all the crayons lie at the other end of the table so she can’t reach them. However, they are well within reach for Bob. The most convenient way to solve this problem is for Alice to make Bob pass the desired crayon. If Alice wants to solve this problem by using language, she needs to say at least one word that indicates which crayon she is interested in (in our case, that would be “blue”). If both children already know all color terms of the English language sufficiently well, the situation is easily resolved: Alice knows that “blue” is the correct word she should use, Bob understands what the word “blue” refers to and (given that he tries to be helpful and not mean) he can pass the correct crayon to Alice. But what if the two kids didn’t know any color terms yet? This is where language games enter the stage.

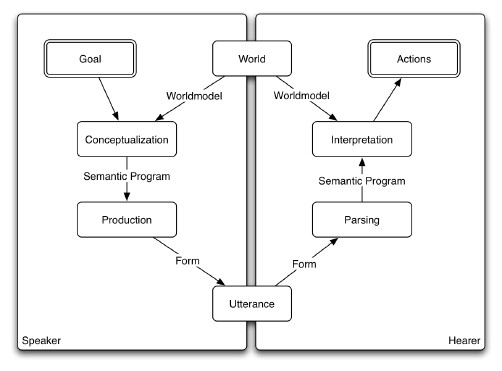

Figure 2 illustrates the general mechanism of such a language game. There are always two individuals involved: a speaker and a hearer (in our example the two children). Both observe the part of the world that is currently relevant (in our case the different crayons on the table). The speaker selects one of the objects as its goal (here: the blue crayon). In the next step, this object needs to be conceptualized, i.e., the speaker must figure out what kind of category or concept this object belongs to (in our case the concept of all blue things). The speaker then tries to produce an utterance that describes this concept (in English, that would be the word “blue”). The hearer listens to this utterance and tries to parse it, i.e., to decide which category/concept this word refers to. Finally, the object that is being talked about needs to be identified (the “interpretation” step). In our case, the hearer needs to decide which of the crayons is the one that the speaker is interested in. The resulting action would in our case simply consist of handing over the selected crayon.
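To make the steps above a bit more concrete, here is a minimal Python sketch of a single round. Everything in it is an assumption made for illustration: the `Agent` class, the `play_round` function, and the representation of categories and words as plain dictionaries are hypothetical and not taken from [1].

```python
from dataclasses import dataclass


@dataclass
class Agent:
    # Hypothetical representation: maps each object to the agent's category for it.
    categories: dict
    # Hypothetical representation: maps each category to the word the agent uses for it.
    lexicon: dict

    def conceptualize(self, obj):
        """Speaker side: find the category the goal object belongs to."""
        return self.categories[obj]

    def produce(self, category):
        """Speaker side: pick the word for this category."""
        return self.lexicon[category]

    def parse(self, word):
        """Hearer side: find the category this word refers to (None if unknown)."""
        for category, known_word in self.lexicon.items():
            if known_word == word:
                return category
        return None

    def interpret(self, category, context):
        """Hearer side: pick the first object in the context that fits the category."""
        if category is None:
            return None
        for obj in context:
            if self.categories.get(obj) == category:
                return obj
        return None


def play_round(speaker, hearer, context, goal):
    """Run one round and report the word, the hearer's choice, and success."""
    word = speaker.produce(speaker.conceptualize(goal))
    choice = hearer.interpret(hearer.parse(word), context)
    return word, choice, choice == goal


context = ["red crayon", "blue crayon", "yellow crayon"]
categories = {"red crayon": "RED", "blue crayon": "BLUE", "yellow crayon": "YELLOW"}
english = {"RED": "red", "BLUE": "blue", "YELLOW": "yellow"}

alice = Agent(categories=dict(categories), lexicon=dict(english))
bob = Agent(categories=dict(categories), lexicon=dict(english))
print(play_round(alice, bob, context, "blue crayon"))
```

If Bob's lexicon mapped the word “blue” to his category of red things instead, the same call would return the red crayon and report a failure.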

If both the speaker and the hearer categorize/conceptualize the world in a similar way and use words in a similar way, this interaction will likely be successful: Alice gets the correct crayon and is happy about that, and Bob is glad that he was able to help. But what if something goes wrong? It could be that the two individuals use words in different ways (e.g., for Alice the word “blue” might refer to all blue things, whereas for Bob it might refer to all red things). It could also happen that the two individuals have different conceptualizations of the world. For instance, the speaker might have two different categories for blue things and for green things, whereas for the hearer there is only one overall category for everything that is blue or green.

In these cases, the interaction might not be successful: Bob might hand over the wrong crayon (e.g., the yellow one), Alice would then be reasonably unhappy (due to not getting the desired result) and Bob might also be disappointed (because the attempt to help failed). In the language games framework, the assumption is that the speaker then indicates the “correct” solution. In our example, this could happen by standing up, walking to the crayons and picking up the blue one. This provides some feedback to both individuals: Alice observes what Bob thought was the correct object, and Bob observes which object Alice was actually referring to. One can now assume that both the speaker and the hearer adjust their categorization and their vocabulary a bit in order to be more successful in the future: Alice could for instance learn that the word used in this interaction is maybe more useful to describe a different category/concept (in the above case, all yellow things), whereas Bob might learn that the word should be associated with the category/concept intended by Alice (i.e., all blue things).

Moreover, the concepts themselves might change: If Bob has only one big concept for all green and blue colors, he cannot distinguish between blue and green crayons. For him, blue and green crayons are just the same thing, so he will have trouble selecting the correct one if there is both a green and a blue one on the table. If the communication frequently fails because of this, Bob might at some point discover that it is useful to split this concept of “green or blue” up into two separate concepts: one for all blue things and one for all green things.
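The vocabulary adjustment after a failed round can be sketched as a simple score update. This is only one possible scheme and not necessarily the one used in [1]: I assume here that each agent keeps an association strength between 0 and 1 for every (word, category) pair, that both agents use the same category labels, and that the speaker only weakens the failed association. The function name `update_after_failure` and the `step` parameter are made up for this example.

```python
def clamp(value):
    """Keep an association strength inside the interval [0, 1]."""
    return max(0.0, min(1.0, value))


def update_after_failure(speaker_scores, hearer_scores, word, intended, guessed, step=0.25):
    """Adjust (word, category) association strengths after a failed round.

    intended: the category the speaker meant (revealed by pointing at the object).
    guessed:  the category the hearer wrongly picked for the word.
    """
    # Speaker: the word did not work for the intended category, so weaken it.
    key = (word, intended)
    speaker_scores[key] = clamp(speaker_scores.get(key, 0.0) - step)

    # Hearer: weaken the reading that led to the wrong object ...
    wrong = (word, guessed)
    hearer_scores[wrong] = clamp(hearer_scores.get(wrong, 0.0) - step)
    # ... and strengthen the reading revealed by the speaker's feedback.
    right = (word, intended)
    hearer_scores[right] = clamp(hearer_scores.get(right, 0.0) + step)


# Alice says "blue" and means BLUE, but Bob hands over the yellow crayon.
alice_scores = {("blue", "BLUE"): 0.5}
bob_scores = {("blue", "YELLOW"): 0.5}
update_after_failure(alice_scores, bob_scores, "blue", intended="BLUE", guessed="YELLOW")
print(alice_scores)
print(bob_scores)
```

Splitting a concept (such as “green or blue”) would require an additional rule on top of this, for example one that is triggered when an agent keeps failing on objects from the same category.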

As Luc Steels and his collaborators were able to show (see e.g. [1]), one can simulate these types of interactions in a computer. If the update rules (i.e., the way in which Alice and Bob adapt their strategy after a failed communication) are set up correctly, the population of digital agents converges on a common conceptualization and a common vocabulary. This common vocabulary can then be used to efficiently communicate about the environment.
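To give a feeling for what such a simulation can look like, here is a heavily simplified sketch. It is not the setup from [1]: there is only a single object to name and no categorization at all, which is a variant often called the minimal naming game. The function name, the parameters, and the word format (`w0`, `w1`, ...) are assumptions made for this example.

```python
import random


def simulate_naming_game(num_agents=10, max_rounds=20000, seed=0):
    """Let agents negotiate a name for one object; return (rounds needed, inventories)."""
    rng = random.Random(seed)
    # Each agent starts without any word for the object.
    inventories = [set() for _ in range(num_agents)]
    next_word = 0

    for round_number in range(1, max_rounds + 1):
        speaker, hearer = rng.sample(range(num_agents), 2)

        # A speaker without any word invents a new one.
        if not inventories[speaker]:
            inventories[speaker].add(f"w{next_word}")
            next_word += 1
        word = rng.choice(sorted(inventories[speaker]))

        if word in inventories[hearer]:
            # Success: both agents drop all competing words.
            inventories[speaker] = {word}
            inventories[hearer] = {word}
        else:
            # Failure: the hearer learns the new word.
            inventories[hearer].add(word)

        # Converged once every agent knows exactly one word and it is the same one.
        one_word_each = all(len(inventory) == 1 for inventory in inventories)
        if one_word_each and len(set.union(*inventories)) == 1:
            return round_number, inventories

    return None, inventories


rounds, inventories = simulate_naming_game()
print(rounds, inventories[0])
```

Early on, many competing words are invented. Successful rounds then prune the alternatives until only one word is left in the whole population.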

Final question: What does this have to do with my research on concept formation? Originally, language games were a means to investigate cultural and evolutionary processes in the development of languages. They were used to look at the question “How can two individuals communicate with each other if they don’t have any common language and thus need to start from scratch?”

What I’m more interested in is a side-effect of these language games: If an interaction fails, the individuals will change both their conceptualization and their vocabulary in order to be more efficient in the future. The final conceptualization of each individual is thus influenced and constrained by these interactions with other individuals. It is exactly these constraints that I am interested in: An individual that plays language games all the time will develop a conceptualization that is useful in these language games, i.e., that is useful in communication. I think that this can provide a useful incentive for an artificial system to learn “good” concepts: arbitrary concepts won’t be sufficient; only concepts that are useful in the agent’s interaction with the world will be selected. This thus provides some (weak) sense of embodiment or context for the concept formation problem.

References

[1] Steels, Luc. The Talking Heads experiment: Origins of words and meanings. Vol. 1. Language Science Press, 2015.