I’ve recently shared my first (and unfortunately relatively disappointing) results of applying InfoGAN [1] to simple shapes. Over the past weeks, I’ve continued to work on this, and my results are starting to look more promising. Today, I’m going to share the current state of my research.

As already mentioned in the last blog post, the original InfoGAN implementation by Chen et al. was not very convenient to use, mostly because it often crashed before finishing training. I have therefore transitioned to TFGAN in order to get a more stable system. Although there are some example code snippets available online, it took me quite some time to get everything up and running with my rectangle data set.

With the new implementation, training is much smoother – there are barely any crashes and thanks to the newer TensorFlow version, which is compatible with the graphics card drivers at my university, I can train the networks quite fast.

Remember that I started with a dataset of rectangles where the only two changing features are the width and the height of the rectangle. My goal was to unsupervisedly rediscover these two dimensions from the data set. After the first few test runs of my new implementation, I however noticed that the network often learned a dimension for the overall size of the rectangle instead of two dimensions for width and height. Thinking about this, I realized that you can also perfectly describe a rectangle by its overall size and its aspect ratio.

When I was skimming through Gärdenfors’ book on conceptual spaces [2] to look something up for a different project, I stumbled over Chapter 4.10 where he describes a psychological experiment: The experimenters used also stimuli with varying widths and heights, but the results of the study indicate that participants tended to use the aspect ratio and the overall size of the stimulus when making similarity judgments. So learning the dimensions of size and aspect ratio is also not completely unreasonable. From a mathematical perspective, you can start with any two of the four dimensions (width, height, size, and aspect ratio) and compute the remaining ones based on them.

I’m currently playing around with different hyperparameters (e.g., the learning rate) and many runs don’t give very useful results. Looking through hundreds of images and judging their interpretability is not a very efficient way of finding promising examples because it is very time consuming. In order to automate this to some extent, I’ve set up a linear regression that tries to predict the four dimensions width, height, size, and aspect ratio from the latent code extracted by the network. Those networks, where the regression is more successful for one or two of the dimensions (i.e., where the regression error is smaller) are more promising candidates.

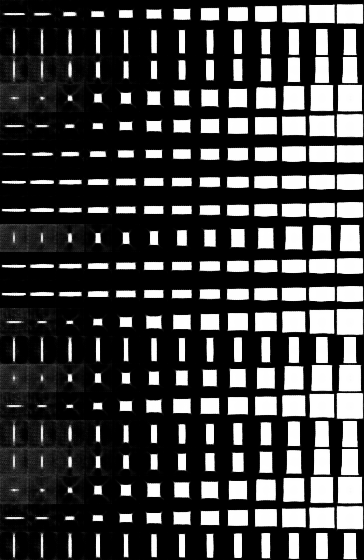

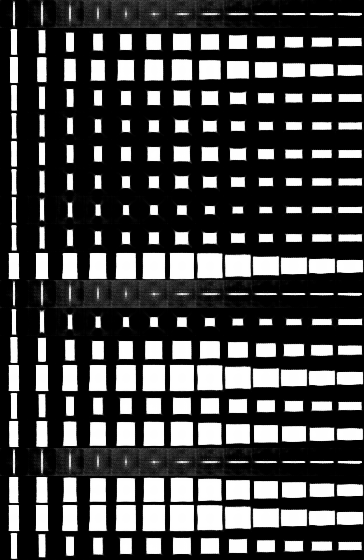

So far, I didn’t have the chance to do a thorough analysis, but there are some promising-looking examples, like the one depicted in Figures 1 and 2 (where each line is a different sample and the value of the latent dimension is increased from left to right).

As you can see, the first dimension seems to correspond to the rectangle’s overall size and the second one to its aspect ratio. Unfortunately, such nice results are still quite rare. I need to analyze the results as well as my setup in more detail in order to better understand what’s going on and to increase the reproducibility of the results. Nevertheless, things are looking better now and I’m looking forward to further progress on this.

If you’re interested in the code, feel free to take a look at the corresponding GitHub project, but please be aware that it has not yet been properly documented.

References

[1] Chen, Xi, et al. “InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets.” Advances in Neural Information Processing Systems, 2016

[2] Gärdenfors, Peter. Conceptual Spaces: The Geometry of Thought. MIT Press, 2000.